The BIAS project will follow an interdisciplinary research and impact methodology.

The research methodology rests on the following pillars:

The creation of National Labs in each country: communities of practitioners, employees, HRM specialists, and AI specialists, with a special focus on underrepresented communities. Members of the National Labs and other interested stakeholders will take part in needs analysis and stakeholder engagement through surveys, interviews, and co-creation workshops.

AI research and development with a focus on Natural Language Processing (NLP) and Case-based Reasoning (CBR).

Ethnographic fieldwork with employers, employees, and AI developers from different European countries, giving PhD candidates and researchers insight into current experiences with, and future scenarios for, the BIAS model and AI in employment settings.

The creation of the Debiaser, our proof-of-concept technology with modules that both identify and mitigate bias and unfairness in decision-making, which will be made available to the AI community.

On the importance of tackling gender and intersectional biases in AI

To equip the AI and HRM community with tools to prevent bias in AI

That companies can use to reduce biases in their HR practices

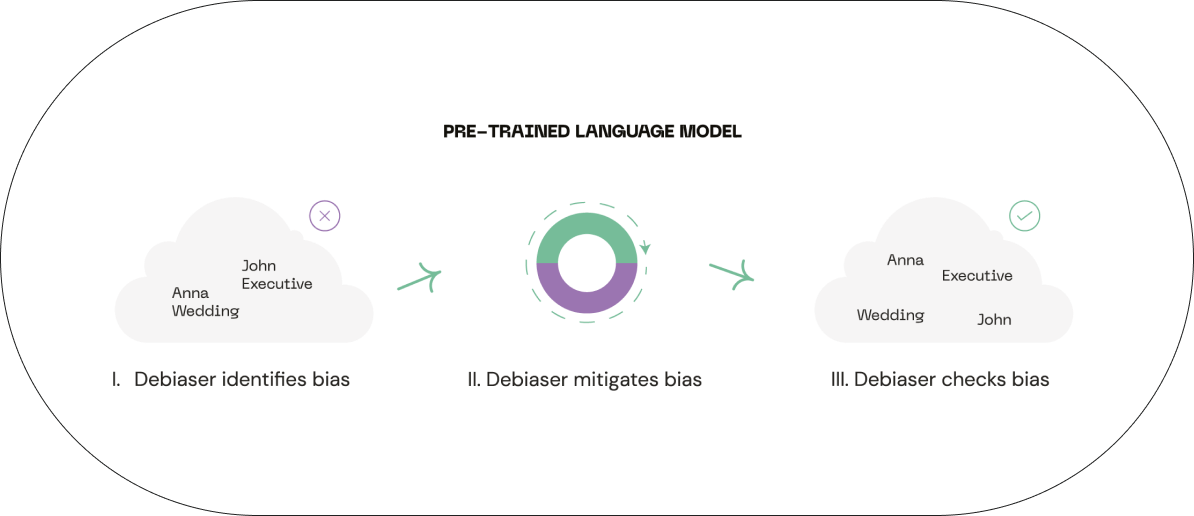

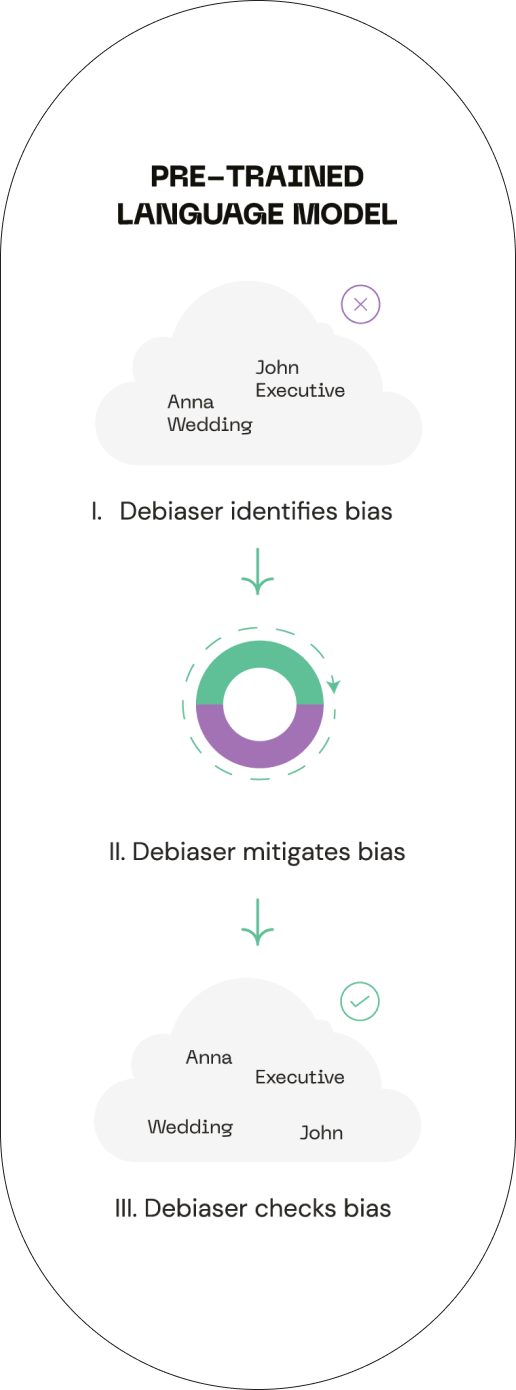

The latest NLP technology is based on so-called pre-trained language models. Trained on large corpora of text, these models provide numerical representations of words, making it easier for computers to process human language. The Debiaser is our innovative proof-of-concept technology that will provide a toolkit for identifying and mitigating biases in such language models, making them safer to apply in Human Resources Management.
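As a rough illustration of what identifying bias in a language model can involve, the sketch below measures whether a word's vector sits closer to one set of attribute words than to another, using cosine similarity. This is the idea behind association tests such as WEAT. The embeddings are invented three-dimensional toy vectors, not output from any real model, and `cosine` and `association` are hypothetical helpers; which tests the Debiaser actually uses is not specified here.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def association(word_vec, group_a, group_b):
    """Mean similarity to group A minus mean similarity to group B.

    Positive values mean the word leans towards group A, negative values
    towards group B, and values near zero suggest no measurable association.
    """
    mean_a = sum(cosine(word_vec, a) for a in group_a) / len(group_a)
    mean_b = sum(cosine(word_vec, b) for b in group_b) / len(group_b)
    return mean_a - mean_b

# Hypothetical toy embeddings; real models use hundreds of dimensions.
embeddings = {
    "he": [1.0, 0.1, 0.0],
    "man": [0.9, 0.2, 0.1],
    "she": [0.1, 1.0, 0.0],
    "woman": [0.2, 0.9, 0.1],
    "engineer": [0.8, 0.3, 0.5],
    "nurse": [0.3, 0.8, 0.5],
}

male = [embeddings["he"], embeddings["man"]]
female = [embeddings["she"], embeddings["woman"]]

for job in ("engineer", "nurse"):
    score = association(embeddings[job], male, female)
    print(f"{job}: {score:+.3f}")
```

With these toy vectors, "engineer" scores positive (closer to the male terms) and "nurse" scores negative. On a real model, such scores would need to be computed over larger word sets and tested for significance before being reported as bias.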

During the recruitment phase, the Debiaser shows which aspects of an application might be subject to discrimination and how to address them to mitigate bias.
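One simple way to show which parts of an application might invite discrimination is to flag words that reveal or stand in for protected characteristics, and optionally redact them before screening. The sketch below assumes a small, hand-made lexicon (`SENSITIVE_TERMS` is hypothetical and far from complete); it illustrates the idea and is not the Debiaser's detection module.

```python
import re

# Hypothetical, deliberately tiny lexicon of words that can reveal or proxy a
# protected characteristic. A real tool would need far richer, language-specific lists.
SENSITIVE_TERMS = {
    "gender": {"he", "she", "his", "her", "mr", "mrs", "ms", "maternity", "paternity"},
    "age": {"born", "aged", "retired"},
    "family status": {"married", "children", "pregnant"},
}

WORD = re.compile(r"[A-Za-z]+")

def flag_sensitive_terms(text):
    """Return (word, category, character offset) for every word found in the lexicon."""
    flags = []
    for match in WORD.finditer(text):
        word = match.group()
        for category, terms in SENSITIVE_TERMS.items():
            if word.lower() in terms:
                flags.append((word, category, match.start()))
    return flags

def redact(text, placeholder="[REDACTED]"):
    """Replace every flagged word with a neutral placeholder (one possible mitigation)."""
    def replace(match):
        word = match.group()
        if any(word.lower() in terms for terms in SENSITIVE_TERMS.values()):
            return placeholder
        return word
    return WORD.sub(replace, text)

application = "Ms Jones returned from maternity leave and is married with two children."
for word, category, offset in flag_sensitive_terms(application):
    print(f"{offset:>3}  {word:<10} -> {category}")
print(redact(application))
```

Redaction is only one possible mitigation. The name "Jones" passes through untouched, and subtler proxies such as hobbies, postcodes, or career gaps would not be caught by keyword matching, which is why keyword lists alone are rarely enough.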

It also provides human-understandable explanations of which parts of an application influenced the automated decisions proposed to recruiters, helping to build trust in those decisions.
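How the Debiaser generates its explanations is not specified here. One common model-agnostic approach is occlusion: remove one part of the input at a time and measure how much the automated score changes. The sketch below applies this to a toy keyword-based screening score; `WEIGHTS`, `score`, and the section names are hypothetical stand-ins for a real model and application format.

```python
# Hypothetical keyword weights standing in for an automated screening model.
WEIGHTS = {"python": 2.0, "leadership": 1.5, "sql": 1.0, "gap": -1.0}

def score(sections):
    """Toy screening score: sum of keyword weights over all application sections."""
    total = 0.0
    for text in sections.values():
        for word in text.lower().split():
            total += WEIGHTS.get(word.strip(".,;:"), 0.0)
    return total

def occlusion_explanation(sections):
    """Attribute the score to each section by removing it and measuring the change."""
    base = score(sections)
    contributions = {}
    for name in sections:
        reduced = {k: v for k, v in sections.items() if k != name}
        contributions[name] = base - score(reduced)
    # Largest absolute impact first, so recruiters see what mattered most.
    return dict(sorted(contributions.items(), key=lambda kv: -abs(kv[1])))

application = {
    "skills": "Python, SQL and data visualisation.",
    "experience": "Team leadership in two projects.",
    "career": "Two-year gap for caregiving.",
}
print("score:", score(application))
for section, impact in occlusion_explanation(application).items():
    print(f"{section:<11} {impact:+.1f}")
```

Here the explanation tells the recruiter that the skills section raised the score the most and that the career gap lowered it. Surfacing a penalty like that matters because gaps for caregiving can act as a proxy for gender.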

The challenge of bias in automated recruiting starts with defining what fairness means. The Debiaser creates a use-case-specific definition of fairness, ensuring that similar candidates are treated similarly.
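A widely used way to formalise "similar candidates are treated similarly" is a Lipschitz condition: for any two candidates `x` and `y`, `|score(x) - score(y)| <= L * d(x, y)`, where `d` is a task-specific distance. Choosing `d` (which features count, and how much) and `L` is where a use-case-specific definition would live. The sketch below checks this condition on a batch of scored candidates; the feature names, weights, and Lipschitz constant are assumptions for illustration only.

```python
from itertools import combinations

# Hypothetical use-case-specific definition: which features are relevant
# for this vacancy, and how much each matters when comparing candidates.
RELEVANT_FEATURE_WEIGHTS = {"years_experience": 0.1, "skill_match": 1.0}

def distance(a, b):
    """Weighted L1 distance over job-relevant features only (others are ignored)."""
    return sum(w * abs(a[f] - b[f]) for f, w in RELEVANT_FEATURE_WEIGHTS.items())

def fairness_violations(candidates, scores, lipschitz=1.0):
    """List candidate pairs whose score gap exceeds lipschitz * distance.

    Similar individuals (small distance) must receive similar scores;
    each violation is reported as (i, j, score gap, allowed bound).
    """
    violations = []
    for i, j in combinations(range(len(candidates)), 2):
        gap = abs(scores[i] - scores[j])
        bound = lipschitz * distance(candidates[i], candidates[j])
        if gap > bound:
            violations.append((i, j, gap, bound))
    return violations

# Hypothetical candidates and model scores.
candidates = [
    {"years_experience": 5, "skill_match": 0.8, "gender": "F"},
    {"years_experience": 5, "skill_match": 0.8, "gender": "M"},
    {"years_experience": 2, "skill_match": 0.4, "gender": "M"},
]
scores = [0.55, 0.80, 0.30]

for i, j, gap, bound in fairness_violations(candidates, scores):
    print(f"candidates {i} and {j}: gap {gap:.2f} exceeds allowed {bound:.2f}")
```

Candidates 0 and 1 have identical job-relevant features and differ only in the `gender` field, which the distance ignores, yet their scores differ by 0.25, so the pair is flagged.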

Based on the BIAS research, the Debiaser will support identifying and building the domain- and organisation-specific knowledge needed to implement Case-based Reasoning, and conducting regular checks to ensure fairness and consistency in the recruitment process.
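For such regular checks, one established metric is k-nearest-neighbour consistency: for each candidate, compare their decision with the average decision given to the `k` most similar candidates, and report one minus the mean disagreement. A value of 1.0 means every candidate was treated like their nearest neighbours. No specific metric is named for the Debiaser, so this is an assumed choice; the features and the value of `k` below are also illustrative.

```python
import math

def knn_consistency(features, decisions, k=2):
    """k-nearest-neighbour consistency of binary decisions (1.0 = fully consistent).

    For each candidate, compare their decision with the mean decision of
    their k most similar other candidates, then average the disagreements.
    """
    n = len(features)
    total_disagreement = 0.0
    for i in range(n):
        # Euclidean distance to every other candidate, nearest first.
        neighbours = sorted(
            (math.dist(features[i], features[j]), j) for j in range(n) if j != i
        )[:k]
        neighbour_mean = sum(decisions[j] for _, j in neighbours) / k
        total_disagreement += abs(decisions[i] - neighbour_mean)
    return 1.0 - total_disagreement / n

# Hypothetical normalised features (experience, skill match) for six candidates.
features = [(0.9, 0.9), (0.9, 0.85), (0.85, 0.9), (0.1, 0.2), (0.15, 0.2), (0.1, 0.25)]

print(knn_consistency(features, [1, 1, 1, 0, 0, 0]))  # similar candidates, same decisions
print(knn_consistency(features, [1, 0, 1, 0, 0, 0]))  # one strong candidate rejected
```

Rejecting one candidate from a group of otherwise very similar, accepted candidates drops the score from 1.0 to about 0.67, which a periodic audit could flag for review.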

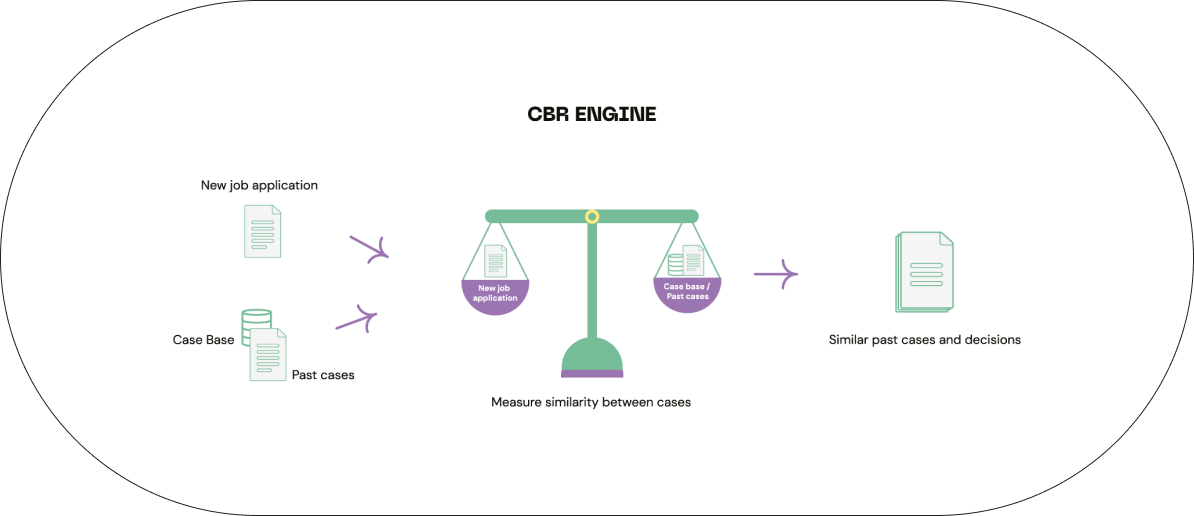

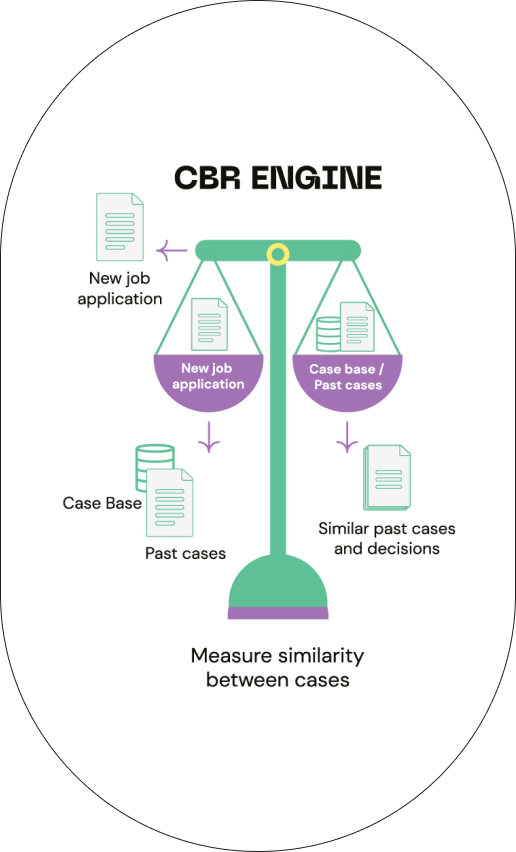

CBR is an AI technique that solves new problems by reusing successful solutions previously applied to similar problems. The two main components of a CBR system are the Case Base, which contains the problems solved in the past, and the CBR engine, which retrieves past cases similar to the new one and reuses their solutions to solve the new problem. This process can be used to ensure “individual fairness”, since similar individuals will be evaluated in a similar way.
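The retrieve-and-reuse cycle described above can be sketched in a few lines. Here the Case Base is a list of past cases, retrieval uses Euclidean distance over job-relevant features, and reuse is a majority vote over the `k` nearest cases. The `Case` and `CBREngine` names, the feature encoding, and the voting rule are assumptions for illustration; real CBR systems often use richer similarity measures and adapt, rather than copy, past solutions.

```python
import math
from collections import Counter
from dataclasses import dataclass

@dataclass
class Case:
    """A past recruitment case: the candidate's profile and the decision taken."""
    features: tuple  # job-relevant features only, e.g. (experience, skill match)
    solution: str    # e.g. "interview" or "reject"

class CBREngine:
    """Minimal CBR engine over a Case Base of past decisions."""

    def __init__(self, case_base, k=3):
        self.case_base = list(case_base)
        self.k = k

    def retrieve(self, query):
        """Return the k past cases most similar to the query (smallest distance)."""
        return sorted(self.case_base, key=lambda c: math.dist(c.features, query))[: self.k]

    def reuse(self, retrieved):
        """Reuse past solutions: here, a simple majority vote among retrieved cases."""
        return Counter(c.solution for c in retrieved).most_common(1)[0][0]

    def solve(self, query):
        """Retrieve similar cases and reuse their solutions for the new candidate."""
        retrieved = self.retrieve(query)
        return self.reuse(retrieved), retrieved

# Hypothetical Case Base with normalised (experience, skill match) features.
case_base = [
    Case((0.9, 0.9), "interview"),
    Case((0.8, 0.85), "interview"),
    Case((0.85, 0.7), "interview"),
    Case((0.2, 0.3), "reject"),
    Case((0.3, 0.2), "reject"),
]
engine = CBREngine(case_base, k=3)
decision, evidence = engine.solve((0.8, 0.8))
print(decision, [c.features for c in evidence])
```

Because the recommendation depends only on the stored job-relevant features, two candidates with identical features always receive the same recommendation, which is the link to individual fairness. The retrieved cases also double as an explanation: a recruiter can inspect the past decisions a recommendation was based on.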